Cousera机器学习基石第四周笔记-Machine-Learning-Foundation-Week-4-Note-in-Cousera

Feasibility of Learning

Learning is Impossible?

Probability to the Rescue

Inferring Something Unknow

in sample→out sample

Possible versus Probable

Hoeffding’s Inequality

In big sample(N large),υ is probably close to u (within ϵ) ℙ[|v−u|>ϵ] ≤ 2exp(−2ϵ2N) called Hoeffding’s Inequality, for marbles,coin,polling

the statement v = u is probably approximately correct(PAC)

valid for all N and ϵ

does not depend on u,no need to knowu

larger sample size N or looser gap ϵ→higher probability for v = u

if large N,can probably infer unknown u by know v

Connection to Learning

Added Components

for any fixed h, can probably infer unkown $E_out(h)=\underset{X\approx P}{\varepsilon}[h(x)\ne f(x)]$by known$ E_in(h)=^N_{n=1}[h(x)f(x)]$

The Formal Guarantee

if Ein(h) ≈ Eout(h) and$E_{in}(h)smallE_{out}(h)samllhf $with respect to P

Verification of One h

if Ein(h) small for the fixed h and A pick the h as g→ g=f PAC

if A force to pick THE h as g → Ein(h) almost always not small → g ≠ f PAC

real learning:

A shall make choices$\in \H$ (like PLA) rather than being forced to pick one h.

The ‘Verification’ Flow

Connection to Real Learning

BAD Sample and BAD Data

BAD Sample:$E_{out}=\frac{1}{2}$,but getting all heads(Ein = 0)

BAD Data for One h:Eout(h) and Einh far away

BAD data for Many h

BAD data for many h ⇔ no freedom of choice by A ⇔ there exists some h such that Eout(h) and Ein(h) far away

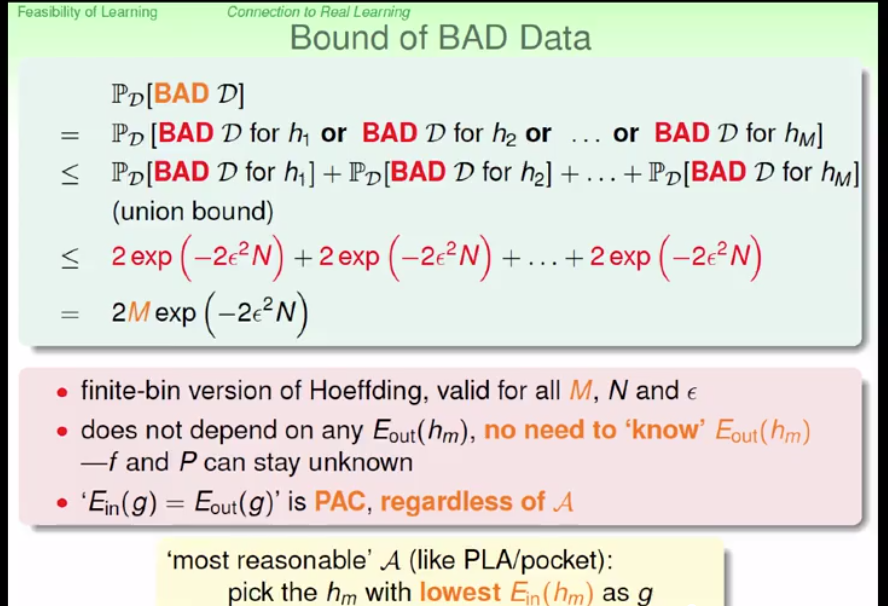

Bound of BAD Data

The Statistical Learning Flow

if |ℍ|= M finite, N large enough,for whatever g picked by A,Eout(g) ≈ Ein(g)

if A finds one g with Ein(g) ≈ 0,PAC guarantee forEout(g)⇒learning possible

M=∞? - see you in the next lectures~

吐槽

这个作业题是真的难啊,花了一个半小时才堪堪通过,尤其是最后几个写PLA和pocket算法的